Introduction

For my first post I would like to introduce myself to my readers! My name is Jack McCallum, and as of 2014 I am a student at the Academy of Interactive Entertainment, learning game development mostly with C++, OpenGL and Visual Studio. In this blog I will keep track of the topics I cover in class as well as the products and applications I have worked on. I will provide links to small applications that anyone can have a go at, along with some pictures and videos I capture along the way.

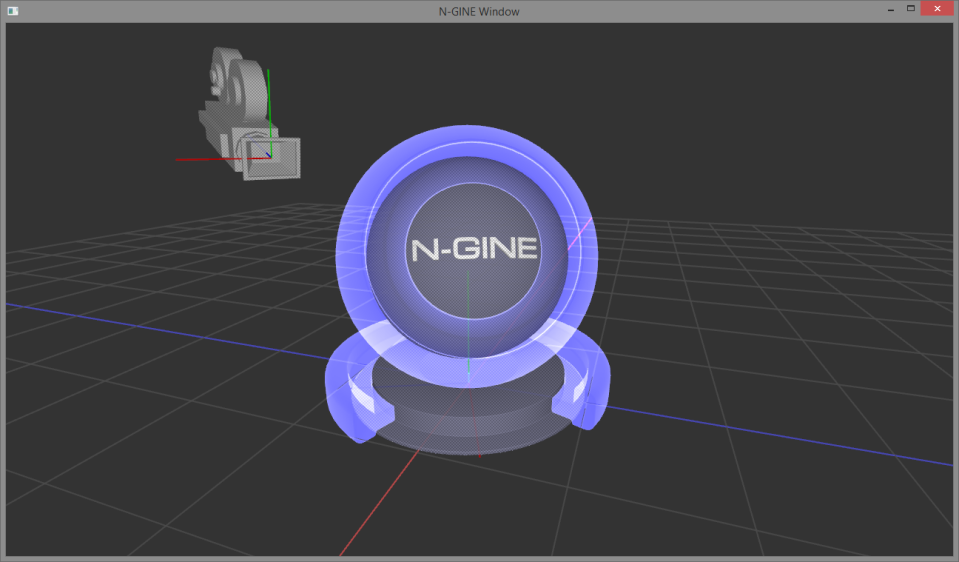

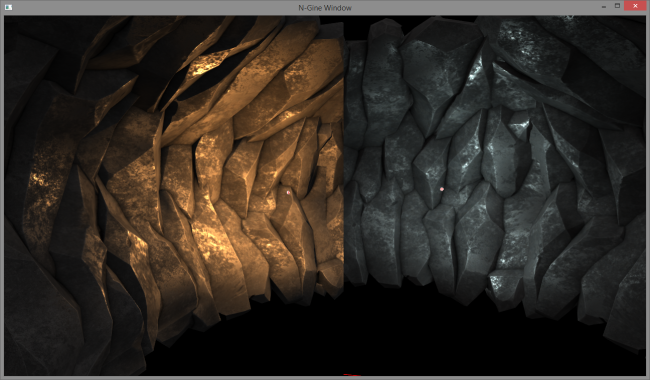

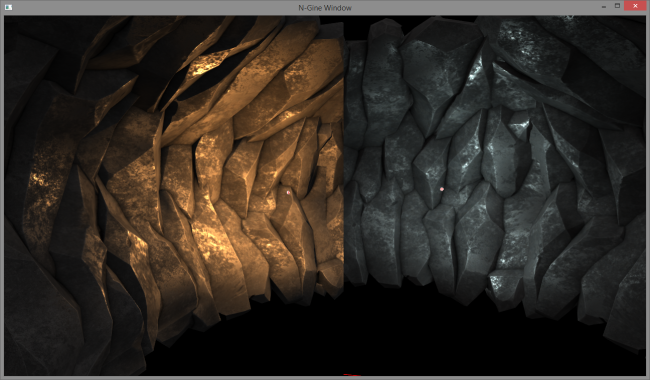

N-Gine

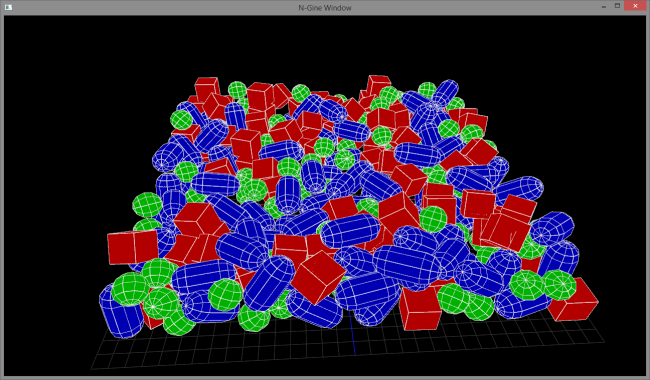

This is the main project I am currently working on. A lot of my smaller projects have hit walls because of my lack of knowledge in certain areas, so I have taken a step back and started building the elements of a game engine from the ground up, to get a strong understanding of how each individual piece works. I am calling this project N-Gine, short for Nugget Engine, based on a nickname another programming buddy gave me. As of today, N-Gine is less of an engine and more of a small framework: a set of wrapper classes for OpenGL with a few extra perks such as logging and mesh loading. Window handling and texture loading are handled by third-party libraries (GLFW for windows and SOIL for textures). I am trying to polish this low-level foundation as much as I can before I move on to the next level of abstraction.

An executable will not be provided for this yet. Hang in there and I will release one soon enough.

Asset provided by John Costello http://johncostelloart.com/

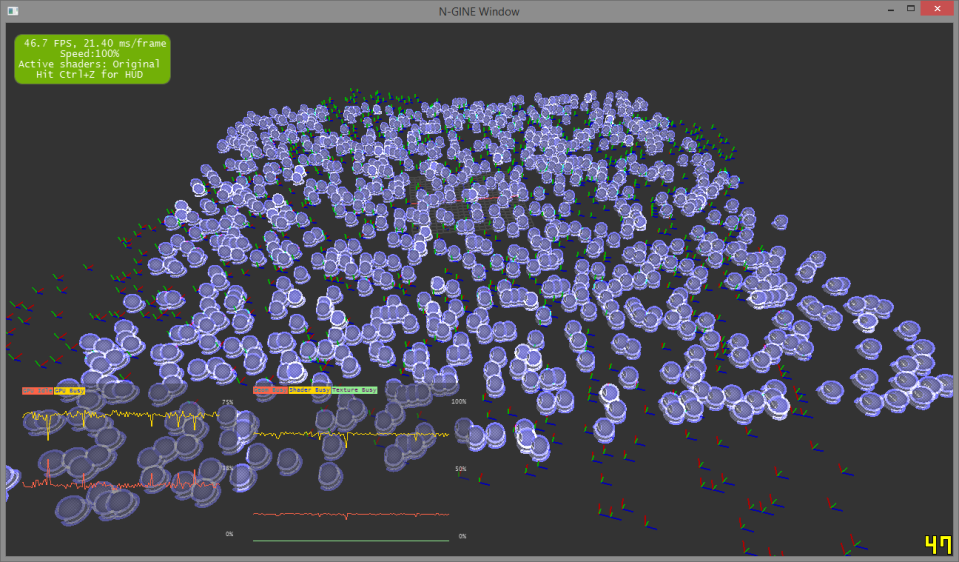

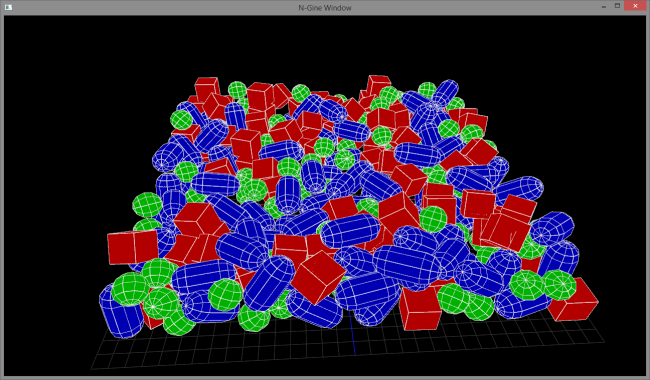

PhysX

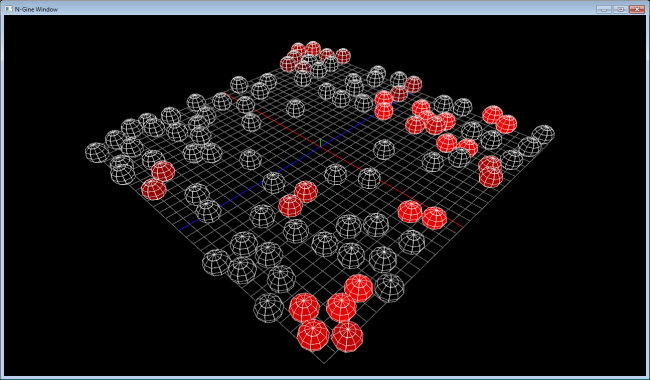

As part of the assignment my class is currently working on, I am required to use the PhysX libraries to create a physics scene simulation. I have not spent much time on this yet, but below is a picture of my progress. So far I have added 150 capsules, boxes and spheres. PhysX is well designed, so it is pretty straightforward to use; because it does so much of the work for you, the assignment also requires me to build my own physics implementation.

Demo link provided below.

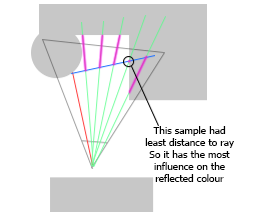

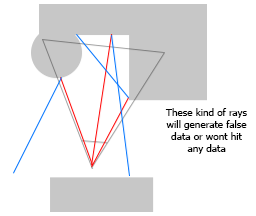

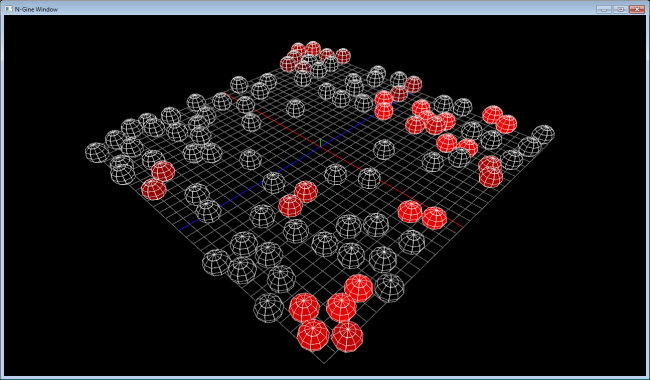

Custom Physics

As I said above, I am required to build my own physics implementation. This is what I have been working on the most, and I am doing my best to understand the simplified pseudocode algorithms used to build real-time physics engines. So far I have sphere-sphere collision detection with linear collision response.

Demo link provided below.

Link to built executables for you to try:

https://dl.dropboxusercontent.com/u/62003039/Hosted%20Files/Demos%2019-5-14.7z
